At the invitation of the School of Management and Economics, Chai Yidong, a researcher at the School of Management, Hefei University of Technology, gave an academic report entitled "Research on Robust Artificial Intelligence Methods Considering Adversarial Attack Threats" in Room 317 of the Main Building at 11:00 a.m. on March 18, 2023. The report was presided over by Secretary Yan Zhijun and attended by many teachers and students from the college.

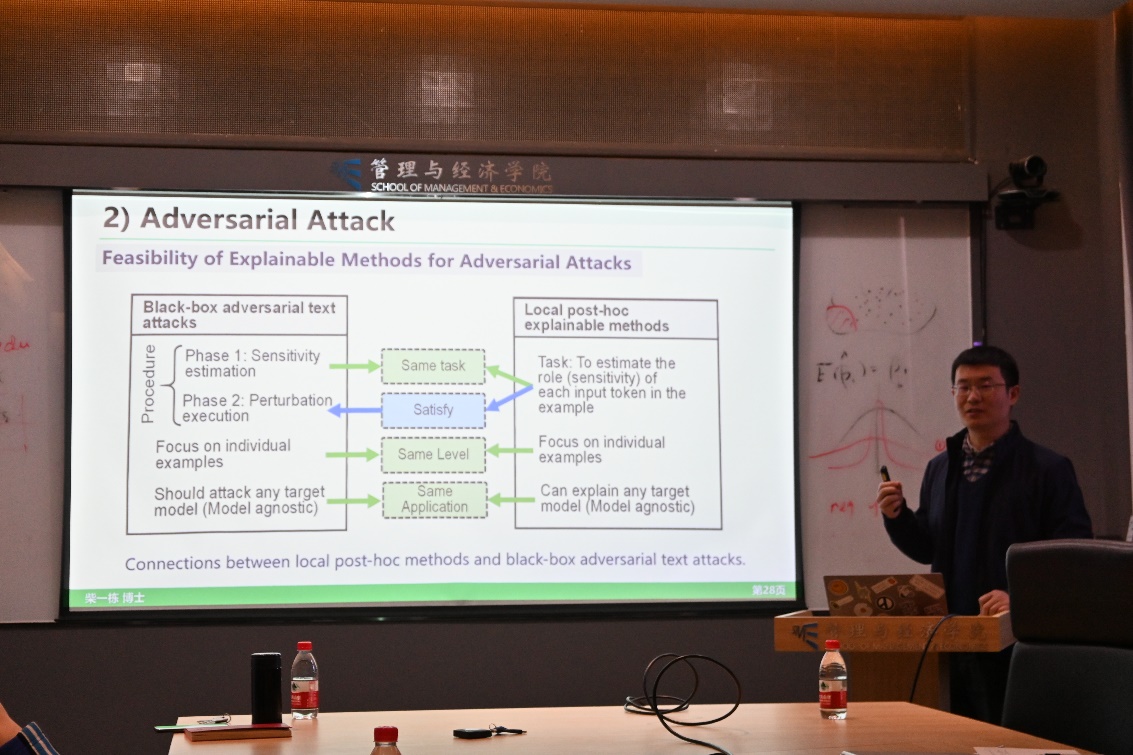

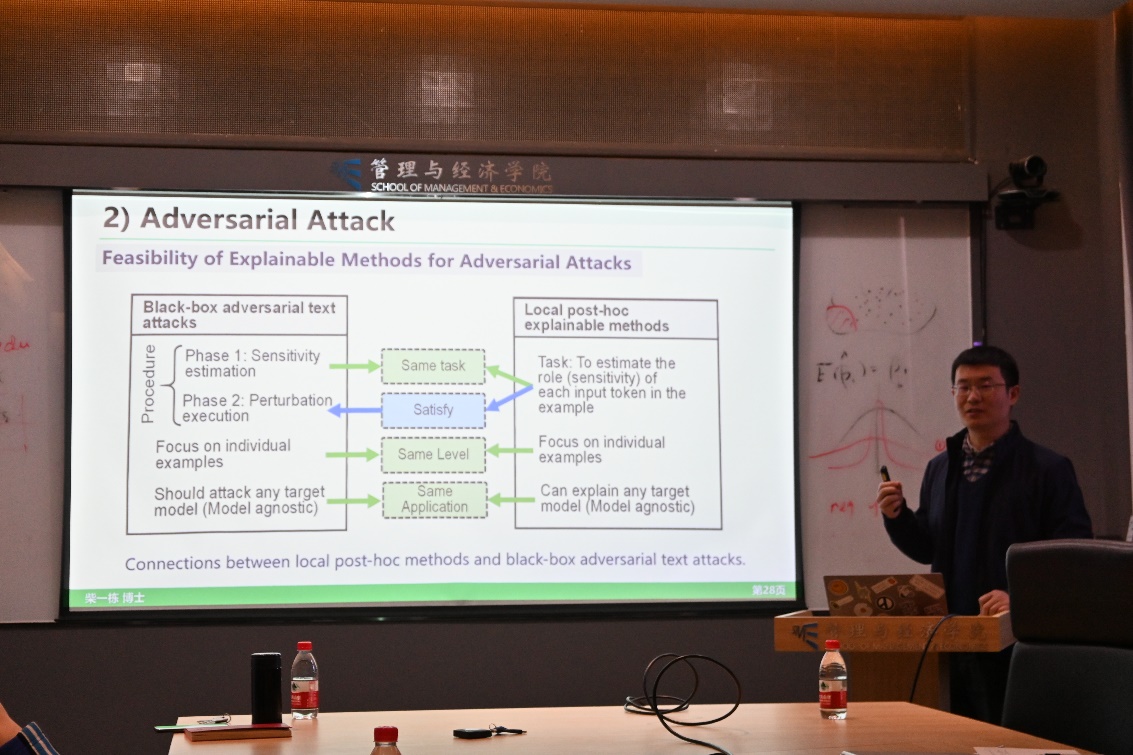

At the beginning of the report, Chai Yidong used cases such as image recognition and text recognition to reveal the security vulnerabilities of current intelligent models. In an adversarial attack, the attacker slightly perturbs original samples to generate adversarial samples that deceive the intelligent model, which poses a serious threat to the model's security. Building on the Technology Threat Avoidance Theory (TTAT), Chai Yidong then explained how to evaluate the ability of intelligent models to resist adversarial attacks (adversarial robustness) and how to improve it.
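
The report does not specify which attack algorithms were discussed. As one well-known illustration of how a slight perturbation can deceive a model, the following minimal sketch applies the Fast Gradient Sign Method (FGSM) to a hypothetical logistic-regression classifier in plain Python. The weights, the input, the perturbation budget `eps`, and the helper names are all invented for illustration.

```python
import math


def predict_proba(w, b, x):
    """Return P(class = 1 | x) for a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """FGSM: shift every feature by eps in the direction that increases
    the cross-entropy loss for the true label y (0 or 1)."""
    p = predict_proba(w, b, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical model parameters and clean input (true label 1)
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.3, 0.2, 0.1], 1

x_adv = fgsm(w, b, x, y, eps=0.2)
print(round(predict_proba(w, b, x), 3), round(predict_proba(w, b, x_adv), 3))
# prints: 0.611 0.438
```

Each feature moves by at most `eps`, yet the predicted class flips from 1 to 0, which is the kind of vulnerability the talk described.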

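About Chai Yidong:

Chai Yidong is a researcher and doctoral supervisor at Hefei University of Technology. He received his Ph.D. from the Department of Management Science and Engineering, School of Economics and Management, Tsinghua University, and his bachelor's degree in Information Management and Information Systems. His work focuses on designing innovative artificial intelligence methods that better serve the modern, science-based management of individuals, organizations, and society. His research areas include information systems security and cyberspace management (such as Internet of Medical Things security), smart healthcare management, and business intelligence management. As first or corresponding author, he has published in top international management and computer science journals such as MISQ, ISR, JMIS, IEEE TDSC, and IEEE TPAMI. His honors include the Best Paper Award at WITS 2021, an authoritative international information systems conference, and the Tsinghua University Outstanding Doctoral Dissertation Award.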

Chai Yidong pointed out that evaluating adversarial robustness can start from two aspects: indicator design and sample selection. For indicators, he proposed relative robustness (PerformanceRatio) and the Area Under the Performance-Perturbation Curve; for sample selection, he proposed XATA, a new interpretable adversarial sample generation framework. To improve adversarial robustness, he designed a Bayesian-based ensemble model that combines multiple models and can resist most adversarial attacks.

Finally, Chai Yidong summarized how these methods for resisting adversarial attacks apply to management practice. He pointed out that organizations should keep the design information and training datasets of their intelligent models confidential, and should strengthen the models' resistance to adversarial attacks by limiting the number of times they can be accessed.

After the report, the attending teachers and students held a lively discussion with Chai Yidong and found it highly inspiring. The report was warmly received and unanimously praised by teachers and students.
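
The report names the robustness indicators and the Bayesian-based ensemble but does not give their formulas. The sketch below is a minimal illustration under stated assumptions: it takes PerformanceRatio to be performance on adversarial samples divided by performance on clean samples, computes the area under the performance-perturbation curve with the trapezoidal rule (normalized by the range of perturbation budgets), and uses standard Bayesian model averaging, with posterior weights p(m | D) ∝ p(m) · p(D | m) estimated from validation log-likelihoods, as a stand-in for the Bayesian-based ensemble. These are substitutions, not the speaker's published definitions; all function names and numbers are hypothetical.

```python
import math


def performance_ratio(clean_score, adv_score):
    """Assumed relative robustness: performance under attack / clean performance."""
    return adv_score / clean_score


def area_under_perf_curve(budgets, scores):
    """Assumed AUC definition: trapezoidal area under the performance-perturbation
    curve, divided by the budget range so a perfectly robust model scores its
    clean performance."""
    area = 0.0
    for i in range(1, len(budgets)):
        width = budgets[i] - budgets[i - 1]
        area += width * (scores[i] + scores[i - 1]) / 2
    return area / (budgets[-1] - budgets[0])


def bma_weights(val_log_likelihoods, log_priors=None):
    """Bayesian model averaging (a stand-in, not the talk's design):
    p(m | D) proportional to p(m) * p(D | m), computed in log space."""
    n = len(val_log_likelihoods)
    if log_priors is None:
        log_priors = [-math.log(n)] * n  # uniform prior over members
    logs = [lp + ll for lp, ll in zip(log_priors, val_log_likelihoods)]
    top = max(logs)  # subtract the max before exp() for numerical stability
    unnorm = [math.exp(v - top) for v in logs]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def bma_predict(member_probs, weights):
    """Posterior-weighted average of each member's class-1 probability."""
    return sum(wt * p for wt, p in zip(weights, member_probs))


# Hypothetical accuracy measured at increasing perturbation budgets
budgets = [0.0, 0.1, 0.2, 0.3]
accuracy = [0.90, 0.72, 0.54, 0.36]
print(round(performance_ratio(accuracy[0], accuracy[2]), 3))  # 0.6
print(round(area_under_perf_curve(budgets, accuracy), 3))  # 0.63

# Hypothetical validation log-likelihoods and predictions of three members
weights = bma_weights([-10.0, -12.0, -10.0])
print(round(bma_predict([0.8, 0.3, 0.6], weights), 3))  # 0.675
```

Normalizing the area keeps the score on the same scale as the underlying metric, so a model whose accuracy never drops under attack scores its clean accuracy. In the averaging step, members that explain the validation data better receive larger weights and dominate the ensemble's prediction.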